- Target audience

- 1. Introduction to JBoss DNA

- I. Developers and Contributors

- II. JBoss DNA Core

- III. JBoss DNA JCR

- IV. Connector Library

- V. Sequencer Library

- VI. MIME Type Detector Library

- 27. Looking to the future

This reference guide is for the developers of JBoss DNA and those users that want to have a better understanding of how JBoss DNA works or how to extend the functionality. For a higher-level introduction to JBoss DNA, see the Getting Started document.

If you have any questions or comments, please feel free to contact JBoss DNA's user mailing list or use the user forums. If you'd like to get involved on the project, join the mailing lists, download the code and get it building, and visit our JIRA issue management system. If there's something in particular you're interested in, talk with the community - there may be others interested in the same thing.

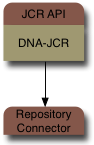

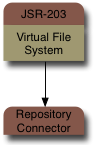

JBoss DNA is a JCR implementation that provides access to content stored in many different kinds of systems. A JBoss DNA repository isn't yet another silo of isolated information, but rather it's a JCR view of the information you already have in your environment: files systems, databases, other repositories, services, applications, etc.

To your applications, JBoss DNA looks and behaves like a regular JCR repository. Using the standard JCR API, applications can search, navigate, version, and listen for changes in the content. But under the covers, JBoss DNA gets its content by federating multiple back-end systems (like databases, services, other repositories, etc.), allowing those systems to continue "owning" the information while ensuring the unified repository stays up-to-date and in sync.

Of course when you start providing a unified view of all this information, you start recognizing the need to store more information, including metadata about and relationships between the existing content. JBoss DNA lets you do this, too. And JBoss DNA even tries to help you discover more about the information you already have, especially the information wrapped up in the kinds of files often found in enterprise systems: service definitions, policy files, images, media, documents, presentations, application components, reusable libraries, configuration files, application installations, databases schemas, management scripts, and so on. As files are loaded into the repository, you can make JBoss DNA automatically sequence these files to extract from their content meaningful information that can be stored in the repository, where it can then be searched, accessed, and analyzed using the JCR API.

This document goes into detail about how JBoss DNA works to provide these capabilities. It also talks in detail about many of the parts within JBoss DNA - what they do, how they work, and how you can extend or customize the behavior. In particular, you'll learn about JBoss DNA connectors and sequencers, how you can use the implementations included in JBoss DNA, and how you can write your own to tailor JBoss DNA for your needs.

So whether your a developer on the project, or you're trying to learn the intricate details of how JBoss DNA works, this document hopefully serves a good reference for developers on the project.

JBoss DNA repositories can be used in a variety of applications. One of the more obvious use cases for a metadata repository is in provisioning and management, where it's critical to understand and keep track of the metadata for models, database, services, components, applications, clusters, machines, and other systems used in an enterprise. Governance takes that a step farther, by also tracking the policies and expectations against which performance of the systems described by the repository can be verified. In these cases, a repository is an excellent mechanism for managing this complex and highly-varied information.

But these large and complex use cases aren't the only way to use a JBoss DNA repository. You could use an embedded JBoss DNA repository to manage configuration information for an application, or you could use JBoss DNA just provide a JCR interface on top of a few non-JCR systems.

The point is that JBoss DNA can be used in many different ways, ranging from the very tiny embedded repository to a large and distributed enterprise-grade repository. The choice is yours.

Before we dive into more detail about JBoss DNA and metadata repositories, it's probably useful to explain what we mean by the term "metadata." Simply put, metadata is the information you need to manage something. For example, it's the information needed to configure an operating system, or the description of the information in an LDAP tree, or the topology of your network. It's the configuration of an application server or enterprise service bus. It's the steps involved in validating an application before it can go into production. It's the description of your database schemas, or of your services, or of the messages going in and coming out of a service. JBoss DNA is designed to be a repository for all this (and more).

There are a couple of important things to understand about metadata. First, many systems manage (and frequently change) their own metadata and information. Databases, applications, file systems, source code management systems, services, content management systems, and even other repositories are just a few types of systems that do this. We can't pull the information out and duplicate it, because then we risk having multiple copies that are out-of-sync. Ideally, we could access all of this information through a homogenous API that also provides navigation, caching, versioning, search, and notification of changes. That would make our lives significantly easier.

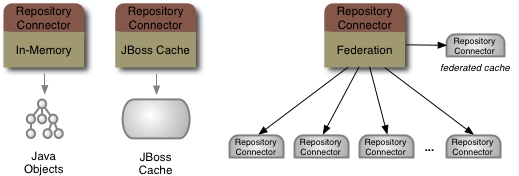

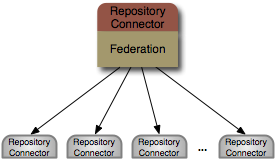

What we want is federation. We can connect to these back-end systems to dynamically access the content and project it into a single, unified repository. We can also cache it for faster access, as long as the cache can be invalidated based upon time or event. But we also need to maintain a clear picture of where all the bits come from, so users can be sure they're looking at the right information. And we need to make it as easy as possible to write new connectors, since there are a lot of systems out there that have information we want to federate.

The second important characteristic of the metadata is that a lot of it is represented as files, and there are a lot of different file formats. These include source code, configuration files, web pages, database schemas, XML schemas, service definitions, policies, documents, spreadsheets, presentations, images, audio files, workflow definitions, business rules, and on and on. And logically if files contain metadata, we want to add those files to our metadata repository. The problem is, all that metadata is tied up as blobs in the repository. Ideally, our repository would automatically extract from those files the content that's most useful to us, and place that content inside the repository where it can be much more easily used, searched, related, and analyzed. JBoss DNA does exactly this via a process we call sequencing, and it's an important part of a metadata repository.

The third important characteristic of metadata is that it rarely stays the same. Different consumers of the information need to see different views of it. Metadata about two similar systems is not always the same. The metadata often needs to be tagged or annotated with additional information. And the things being described often change over time, meaning the metadata has to change, too. As a result, the way in which we store and manage the metadata has to be flexible and able to adapt to our ever-changing needs, and the object model we use to interact with the repository must accommodate these needs. The graph-based nature of the JCR API provides this flexibility while also giving us the ability to constrain information when it needs to be constrained.

There are a lot of choices for how applications can store information persistently so that it can be accessed at a later time and by other processes. The challenge developers face is how to use an approach that most closely matches the needs of their application. This choice becomes more important as developers choose to focus their efforts on application-specific logic, delegating much of the responsibilities for persistence to libraries and frameworks.

Perhaps one of the easiest techniques is to simply store information in files . The Java language makes working with files relatively easy, but Java really doesn't provide many bells and whistles. So using files is an easy choice when the information is either not complicated (for example property files), or when users may need to read or change the information outside of the application (for example log files or configuration files). But using files to persist information becomes more difficult as the information becomes more complex, as the volume of it increases, or if it needs to be accessed by multiple processes. For these situations, other techniques often have more benefits.

Another technique built into the Java language is Java serialization , which is capable of persisting the state of an object graph so that it can be read back in at a later time. However, Java serialization can quickly become tricky if the classes are changed, and so it's beneficial usually when the information is persisted for a very short period of time. For example, serialization is sometimes used to send an object graph from one process to another. Using serialization for longer-term storage of information is more risky.

One of the more popular and widely-used persistence technologies is the relational database. Relational database management systems have been around for decades and are very capable. The Java Database Connectivity (JDBC) API provides a standard interface for connecting to and interacting with relational databases. However, it is a low-level API that requires a lot of code to use correctly, and it still doesn't abstract away the DBMS-specific SQL grammar. Also, working with relational data in an object-oriented language can feel somewhat unnatural, so many developers map this data to classes that fit much more cleanly into their application. The problem is that manually creating this mapping layer requires a lot of repetitive and non-trivial JDBC code.

Object-relational mapping libraries automate the creation of this mapping layer and result in far less code that is much more maintainable with performance that is often as good as (if not better than) handwritten JDBC code. The new Java Persistence API (JPA) provide a standard mechanism for defining the mappings (through annotations) and working with these entity objects. Several commercial and open-source libraries implement JPA, and some even offer additional capabilities and features that go beyond JPA. For example, Hibernate is one of the most feature-rich JPA implementations and offers object caching, statement caching, extra association mappings, and other features that help to improve performance and usefulness. Plus, Hibernate is open-source (with support offered by JBoss).

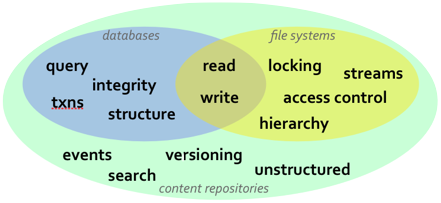

While relational databases and JPA are solutions that work well for many applications, they are more limited in cases when the information structure is highly flexible, the structure is not known a priori, or that structure is subject to frequent change and customization. In these situations, content repositories may offer a better choice for persistence. Content repositories are almost a hybrid with the storage capabilities of relational databases and the flexibility offered by other systems, such as using files. Content repositories also typically provide other capabilities as well, including versioning, indexing, search, access control, transactions, and observation. Because of this, content repositories are used by content management systems (CMS), document management systems (DMS), and other applications that manage electronic files (e.g., documents, images, multi-media, web content, etc.) and metadata associated with them (e.g., author, date, status, security information, etc.). The Content Repository for Java technology API provides a standard Java API for working with content repositories. Abbreviated "JCR", this API was developed as part of the Java Community Process under JSR-170 and is being revised under JSR-283.

The JCR API provides a number of information services that are needed by many applications, including: read and write access to information; the ability to structure information in a hierarchical and flexible manner that can adapt and evolve over time; ability to work with unstructured content; ability to (transparently) handle large strings; notifications of changes in the information; search and query; versioning of information; access control; integrity constraints; participation within distributed transactions; explicit locking of content; and of course persistence.

The roadmap for JBoss DNA is managed in the project's JIRA instance . The roadmap shows the different tasks, requirements, issues and other activities that have been targeted to each of the upcoming releases. (The roadmap report always shows the next three releases.)

By convention, the JBoss DNA project team periodically review JIRA issues that aren't targeted to a release, and then schedule them based upon current workload, severity, and the roadmap. And if we review an issue and don't know how to target it, we target it to the Future Releases bucket.

At the start of a release, the project team reviews the roadmap, identifies the goals for the release, and targets (or retargets) the issues appropriately.

Rather than use a single formal development methodology, the JBoss DNA project incorporates those techniques, activities, and processes that are practical and work for the project. In fact, the committers are given a lot of freedom for how they develop the components and features they work on.

Nevertheless, we do encourage familiarity with several major techniques, including:

Agile software development includes those software methodologies (e.g., Scrum) that promote development iterations and open collaboration. While the JBoss DNA project doesn't follow these closely, we do emphasize the importance of always having running software and using running software as a measure of progress. The JBoss DNA project also wants to move to more frequent releases (on the order of 4-6 weeks)

Test-driven development (TDD) techniques encourage first writing test cases for new features and functionality, then changing the code to add the new features and functionality, and finally the code is refactored to clean-up and address any duplication or inconsistencies.

Behavior-driven development (BDD) is an evolution of TDD, where developers specify the desired behaviors first (rather than writing "tests"). In reality, this BDD adopts the language of the user so that tests are written using words that are meaningful to users. With recent test frameworks (like JUnit 4.4), we're able to write our unit tests to express the desired behavior. For example, a test class for sequencer implementation might have a test method

shouldNotThrowAnErrorWhenStreamIsNull(), which is very easy to understand the intent. The result appears to be a larger number of finer-grained test methods, but which are more easily understood and easier to write. In fact, many advocates of BDD argue that one of the biggest challenges of TDD is knowing what tests to write in the beginning, whereas with BDD the shift in focus and terminology make it easier for more developers to enumerate the tests they need.Lean software development is an adaptation of lean manufacturing techniques, where emphasis is placed on eliminating waste (e.g., defects, unnecessary complexity, unnecessary code/functionality/features), delivering as fast as possible, deferring irrevocable decisions as much as possible, continuous learning (continuously adapting and improving the process), empowering the team (or community, in our case), and several other guidelines. Lean software development can be thought of as an evolution of agile techniques in the same way that behavior-driven development is an evolution of test-driven development. Lean techniques help the developer to recognize and understand how and why features, bugs, and even their processes impact the development of software.

JBoss DNA consists of the following modules:

dna-jcr contains JBoss DNA's implementation of the JCR API. If you're using JBoss DNA as a JCR repository, this is the top-level dependency that you'll want to use. The module defines all required dependencies, except for the repository connector(s) and any sequencer implementations needed by your configuration. As we'll see later on, using JBoss DNA as a JCR repository is easy: simply create a configuration, start the JCR engine, get the JCR Repository object for your repository, and then use the JCR API. This module also contains the Jackrabbit JCR API unit tests that verify the behavior of the JBoss DNA implementation. As DNA does not fully implement the JCR 1.0.1 specification, there are a series of tests that are currently commented out in this module. The

dna-jcr-tckmodule contains all of these tests.dna-repository provides the core DNA graph engine and services for managing repository connections, sequencers, MIME type detectors, and observation. If you're using JBoss DNA repositories via our graph API rather than JCR, then this is where you'd start.

dna-graph defines the Application Programming Interface (API) for JBoss DNA's low-level graph model, including a DSL-like API for working with graph content. This module also defines the APIs necessary to implement custom connectors, sequencers, and MIME type detectors.

dna-cnd provides a self-contained utility for parsing CND (Compact Node Definition) files and transforming the node definitions into a graph notation compatible with JBoss DNA's JCR implementation.

dna-common is a small low-level library of common utilities and frameworks, including logging, progress monitoring, internationalization/localization, text translators, component management, and class loader factories.

There are several modules that provide system- and integration-level tests:

dna-jcr-tck provides a separate testing project that executes all Jackrabbit JCR TCK tests on a nightly basis to track implementation progress against the JCR 1.0 specification. This module will likely be retired when the

dna-jcrimplementation is complete.dna-integration-tests provides a home for all of the integration tests that involve more components that just unit tests. Integration tests are often more complicated, take longer, and involve testing the integration and functionality of multiple components (whereas unit tests focus on testing a single class or component and may use stubs or mock objects to isolate the code being tested from other related components).

The following modules are optional extensions that may be used selectively and as needed (and are located in the source

under the

extensions/

directory):

dna-classloader-maven is a small library that provides a

ClassLoaderFactoryimplementation that can createjava.lang.ClassLoaderinstances capable of loading classes given a Maven Repository and a list of Maven coordinates. The Maven Repository can be managed within a JCR repository.dna-common-jdbc contains several helpful utility classes for interacting with JDBC connections.

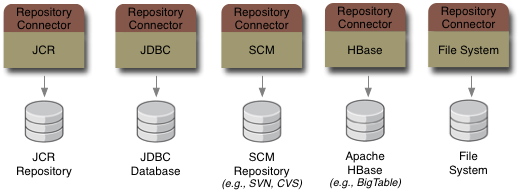

dna-connector-federation is a DNA repository connector that federates, integrates and caches information from multiple sources (via other repository connectors).

dna-connector-filesystem is a DNA repository connector that provides read-only access to file systems, allowing their structure and data to be viewed as repository content.

dna-connector-jdbc-metadata is a prototype DNA repository connector that provides read-only access to metadata from relational databases through a JDBC connection. This is still under development.

dna-connector-jbosscache is a DNA repository connector that manages content within a JBoss Cache instance. JBoss Cache is a powerful cache implementation that can serve as a distributed cache and that can persist information. The cache instance can be found via JNDI or created and managed by the connector.

dna-connector-store-jpa is a DNA sequencer that provides for persistent storage and access of DNA content in a relational database. This connector is based on JPA technology.

dna-connector-svn is a prototype DNA sequencer that obtains content from a Subversion repository, providing that content in the form of

nt:fileandnt:foldernodes.dna-sequencer-zip is a DNA sequencer that extracts from ZIP archives the files (with content) and folders.

dna-sequencer-xml is a DNA sequencer that extracts the structure and content from XML files.

dna-sequencer-images is a DNA sequencer that extracts the image metadata (e.g., size, date, etc.) from PNG, JPEG, GIF, BMP, PCS, IFF, RAS, PBM, PGM, and PPM image files.

dna-sequencer-mp3 is a DNA sequencer that extracts metadata (e.g., author, album name, etc.) from MP3 audio files.

dna-sequencer-java is a DNA sequencer that extracts the package, class/type, member, documentation, annotations, and other information from Java source files.

dna-sequencer-msoffice is a DNA sequencer that extracts metadata and summary information from Microsoft Office documents. For example, the sequencer extracts from a PowerPoint presentation the outline as well as thumbnails of each slide. Microsoft Word and Excel files are also supported.

dna-sequencer-cnd is a DNA sequencer that extracts JCR node definitions from JCR Compact Node Definition (CND) files.

dna-sequencer-jbpm-jpdl is a prototype DNA sequencer that extracts process definition metadata from jBPM process definition language (jPDL) files. This is still under development.

dna-sequencer-java is a DNA sequencer that extracts the structure (methods, fields) from Java source files.

dna-mimetype-detector-aperture is a DNA MIME type detector that uses the Aperture library to determine the best MIME type from the filename and file contents.

dna-web-jcr-rest provides a set of JSR-311 (JAX-RS) objects that form the basis of a RESTful server for Java Content Repositories. This project provides integration with DNA's JCR implementation (of course) but also contains a service provider interface (SPI) that can be used to integrate other JCR implementations with these RESTful services in the future. For ease of packaging, these classes are provided as a JAR that can be placed in the WEB-INF/lib of a deployed RESTful server WAR.

dna-web-jcr-rest-war wraps the RESTful services from the dna-web-jcr-rest JAR into a WAR and provides in-container integration tests. This project can be consulted as a template for how to deploy the RESTful services in a custom implementation.

There are also documentation modules (located in the source under the

docs/

directory):

docs-getting-started is the project with the DocBook source for the JBoss DNA Getting Started document.

docs-getting-started-examples is the project with the Java source for the example application used in the JBoss DNA Getting Started document.

docs-reference-guide is the project with the DocBook source for this document, the JBoss DNA Reference Guide document.

Finally, there is a module that represents the whole JBoss DNA project:

dna is the parent project that aggregates all of the other projects and that contains some asset files to create the necessary Maven artifacts during a build.

Each of these modules is a Maven project with a group ID of

org.jboss.dna

. All of these projects correspond to artifacts in the

JBoss Maven 2 Repository

.

The JBoss DNA project uses a number of process, tools, and procedures to assist in the development of the software. This portion of the document focuses on these aspects and will help developers and contributors obtain the source code, build locally, and contribute to the project.

If you're not contributing to the project but are still developing custom connectors or sequencers. this information may be helpful in establishing your own environment.

Table of Contents

The JBoss DNA project uses Maven as its primary build tool, Subversion for its source code repository, JIRA for the issue management and bug tracking system, and Hudson for the continuous integration system. We do not stipulate a specific integrated development environment (IDE), although most of us use Eclipse and rely upon the code formatting and compile preferences to ensure no warnings or errors.

The rest of this chapter talks in more detail about these different tools and how to set them up.

Currently, JBoss DNA is developed and built using JDK 5. So if you're trying to get JBoss DNA to compile locally, you should make sure you have the JDK 5 installed and are using it. If you're a contributor, you should make sure that you're using JDK 5 before committing any changes.

Note

You should be able to use the latest JDK, which is currently JDK 6. It is possible to build JBoss DNA using JDK 6 without any code changes, but it's not our official JDK (yet).

Why do we build using JDK 5 and not 6? The main reason is that if we were to use JDK 6, then JBoss DNA couldn't really be used in any applications or projects that still used JDK 5. Plus, anybody using JDK 6 can still use JBoss DNA. However, considering that the end-of-life for Java 5 is October 2009, we may be switching to Java 6 sometime in 2009.

When installing a JDK, simply follow the procedure for your particular platform. On most platforms, this should set the

JAVA_HOME environment variable. But if you run into any problems, first check that this environment

variable was set to the correct location, and then check that you're running the version you expect by running

the following command:

$ java -version

If you don't see the correct version, double-check your JDK installation.

JBoss DNA uses JIRA as its bug tracking, issue tracking, and project management tool. This is a browser-based tool, with very good functionality for managing the different tasks. It also serves as the community's roadmap, since we can define new features and manage them along side the bugs and other issues. Although most of the issues have been created by community members, we encourage any users to suggest new features, log defects, or identify shortcomings in JBoss DNA.

The JBoss DNA community also encourages its members to work only issues that are managed in JIRA, and preferably those that are targeted to the current release effort. If something isn't in JIRA but needs to get done, then create an issue before you start working on the code changes. Once you have code changes, you can upload a patch to the JIRA issue if the change is complex, if you want someone to review it, or if you don't have commit privileges and have fixed a bug.

JBoss DNA uses Subversion as its source code management system, and specifically the instance at JBoss.org. Although you can view the trunk of the Subversion repository directly (or using FishEye) through your browser, it order to get more than just a few files of the latest version of the source code, you probably want to have an SVN client installed. Several IDE's have SVN support included (or available as plugins), but having the command-line SVN client is recommended. See http://subversion.tigris.org/ for downloads and instructions for your particular platform.

Here are some useful URLs for the JBoss DNA Subversion:

Table 2.1. SVN URLs for JBoss DNA

| Repository | URL |

|---|---|

| Anonymous Access URL | http://anonsvn.jboss.org/repos/dna/trunk/ |

| Secure Developer Access URL | http://fisheye.jboss.org/browse/DNA/trunk/ |

| FishEye Code Browser | https://svn.jboss.org/repos/dna/trunk/ |

When committing to SVN, be sure to include in a commit comment that includes the JIRA issue that the commit applies to and a very good and thorough description of what was done. It only takes a minute or two to be very clear about the change. And including the JIRA issue (e.g., "DNA-123") in the comment allows the JIRA system to track the changes that have been made for each issue.

Also, any single SVN commit should apply to one and only one JIRA issue. Doing this helps ensure that each commit is atomic and focused on a single activity. There are exceptions to this rule, but they are rare.

Sometimes you may have some local changes that you don't want to (or aren't allowed to) commit. You can make a patch file

and upload it to the JIRA issue, allowing other committers to review the patch. However, to ensure that patches are easily

applied, please use SVN to create the patch. To do this, simply do the following in the top of the codebase (e.g., the

trunk directory):

$ svn diff . > ~/DNA-000.patch

where DNA-000 represents the DNA issue number. Note that the above command places the patch file in your home directory,

but you can place the patch file anywhere. Then, simply use JIRA to attach the patch file to the particular issue, also adding

a comment that describes the version number against which the patch was created.

To apply a patch, you usually want to start with a workspace that has no changes. Download the patch file, then issue the following command (again, from the top-level of the workspace):

$ patch -E -p0 < ~/DNA-000.patch

The "-E" option specifies to delete any files that were made empty by the application of the patch, and the "-p0" option instructs the patch tool to not change any of the paths. After you run this command, your working area should have the changes defined by the patch.

Several contributors are using Git on their local development machines. This allows the developer to use Git branches, commits, merges, and other Git tools, but still using the JBoss DNA Subversion repository. For more information, see our blog posts on the topic.

JBoss DNA uses Maven 2 for its build system, as is this example. Using Maven 2 has several advantages, including the ability to manage dependencies. If a library is needed, Maven automatically finds and downloads that library, plus everything that library needs. This means that it's very easy to build the examples - or even create a maven project that depends on the JBoss DNA JARs.

To use Maven with JBoss DNA, you'll need to have JDK 5 or 6 and Maven 2.0.9 (or higher).

Maven can be downloaded from http://maven.apache.org/, and is installed by unzipping the

maven-2.0.9-bin.zip file to a convenient location on your local disk. Simply add $MAVEN_HOME/bin

to your path and add the following profile to your ~/.m2/settings.xml file:

<settings>

<profiles>

<profile>

<id>jboss.repository</id>

<activation>

<property>

<name>!jboss.repository.off</name>

</property>

</activation>

<repositories>

<repository>

<id>snapshots.jboss.org</id>

<url>http://snapshots.jboss.org/maven2</url>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

<repository>

<id>repository.jboss.org</id>

<url>http://repository.jboss.org/maven2</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<pluginRepositories>

<pluginRepository>

<id>repository.jboss.org</id>

<url>http://repository.jboss.org/maven2</url>

<snapshots>

<enabled>false</enabled>

</snapshots>

</pluginRepository>

<pluginRepository>

<id>snapshots.jboss.org</id>

<url>http://snapshots.jboss.org/maven2</url>

<snapshots>

<enabled>true</enabled>

</snapshots>

</pluginRepository>

</pluginRepositories>

</profile>

</profiles>

</settings>

This profile informs Maven of the two JBoss repositories (snapshots and releases) that contain all of the JARs for JBoss DNA and all dependent libraries.

While you're adding $MAVEN_HOME/bin to your path, you should also set the $MAVEN_OPTS environment variable

to "-Xmx256m". If you don't do this, you'll likely see an java.lang.OutOfMemoryError sometime during a full

build.

Note

The JBoss Maven repository provides a central location for not only the artifacts produced by the JBoss.org projects (well, at least those that use Maven), but also is where those projects can place the artifacts that they depend on. JBoss DNA has a policy that the source code and JARs for all dependencies must be loaded into the JBoss Maven repository. It may be a little bit more work for the developers, but it does help ensure that developers have easy access to the source and that the project (and dependencies) can always be rebuilt when needed.

For more information about the JBoss Maven repository, including instructions for adding source and JAR artifacts, see the JBoss.org Wiki.

There are just a few commands that are useful for building JBoss DNA (and it's subprojects).

Usually, these are issued while at the top level of the code (usually just below trunk/), although issuing

them inside a subproject just applies to that subproject.

Table 2.2. Useful Maven commands

| Command | Description |

|---|---|

mvn clean | Clean up all built artifacts (e.g., the target/ directory in each project) |

mvn clean install | Clean up all built artifacts, then compile, run the unit tests, and install the resulting JAR artifact(s)

into your local Maven repository (e.g, usually ~/.m2/repository).

|

JBoss DNA's continuous integration is done with several Hudson jobs on JBoss.org. These jobs run periodically and basically run the Maven build process. Any build failures or test failures are reported, as are basic statistics and history for each job.

Table 2.3. Continuous integration jobs

| Job | Description |

|---|---|

| Continuous on JDK 5 | Continuous build that runs after changes are committed to SVN. SVN is polled every 15 minutes. |

| Nightly on JDK 5 | Build that runs every night (usually around 2 a.m. EDT), regardless of whether changes have been committed to SVN since the previous night. |

Many of the JBoss DNA committers use the Eclipse IDE, and all project files required by Eclipse are committed in SVN, making it pretty easy to get an Eclipse workspace running with all of the JBoss DNA projects. Many of the JBoss DNA committers use the Eclipse IDE, and all project files required by Eclipse are committed in SVN, making it pretty easy to get an Eclipse workspace running with all of the JBoss DNA projects.

We're using the latest released version of Eclipse (3.4, called "Ganymede"), available from Eclipse.org. Simply follow the instructions for your platform.

After Eclipse is installed, create a new workspace. Before importing the JBoss DNA projects, import (via

File->Import->Preferences) the subset of the Eclipse preferences by importing the

eclipse-preferences.epf file (located under trunk). Then, open the Eclipse preferences and

open the Java->Code Style-> Formatter preference page, and press the "Import" button and

choose the eclipse-code-formatter-profile.xml file (also located under trunk). This will load the code

formatting preferences for the JBoss DNA project.

Then install Eclipse plugins for SVN and Maven. (Remember, you will have to restart Eclipse after installing them.) We use the following plugins:

Table 2.4. Eclipse Subversion Plugins

| Eclipse Plugins | Update Site URLs |

|---|---|

| Subversive SVN Client | http://www.polarion.org/projects/subversive/download/eclipse/2.0/update-site/ http://www.polarion.org/projects/subversive/download/integrations/update-site/ |

| Maven Integration for Eclipse | http://m2eclipse.sonatype.org/update/ |

After you check out the JBoss DNA codebase, you can import the JBoss DNA Maven projects into Eclipse as Eclipse projects.

To do this, go to "File->Import->Existing Projects", navigate to the trunk/ folder in the import wizard,

and then check each of the subprojects that you want to have in your workspace.

Don't forget about the projects under extensions/ or docs/.

This section outlines the basic process of releasing JBoss DNA. This must be done either by the project lead or only after communicating with the project lead.

Before continuing, your local workspace should contain no changes and should be a perfect reflection of Subversion. You can verify this by getting the latest from Subversion

$ svn update

and ensuring that you have no additional changes with

$ svn status

You may also want to note the revision number for use later on in the process. The release number is returned by

the svn update command, but may also be found using

$ svn info

At this point, you're ready to verify that everything builds normally.

By default, the project's Maven build process is does not build the documentation, JavaDocs, or assemblies. These take extra time, and most of our builds don't require them. So the first step of releasing JBoss DNA is to use Maven to build all of regular artifacts (e.g., JARs) and these extra documents and assemblies.

Note

Before running Maven commands to build the releases, increase the memory available to Maven with this command:

$ export MAVEN_OPTS=-Xmx256m

To perform this complete build, issue the following command while in the target/ directory:

$ mvn -P assembly clean javadoc:javadoc install

This command runs the "clean", "javadoc:javadoc", and "install" goals using the "assembly" profile, which adds the production of JavaDocs, the Getting Started document, the Reference Guide document, the Getting Started examples, and several ZIP archives. The order of the goals is important, since the "install" goal attempts to include the JavaDoc in the archives.

After this build has completed, verify that the assemblies under target/ have actually been created and that

they contain the correct information.

At this point, we know that the actual Maven build process is building

everything we want and will complete without errors. We can now proceed with preparing for the release.

The version being released should match the JIRA road map. Make sure that all issues related to the release are closed. The project lead should be notified and approve that the release is taking place.

The next step is to ensure that all information in the POM is correct and contains all the information required for the release process. This is called a dry run, and is done with the Maven "release" plugin:

$ mvn -Passembly release:prepare -DdryRun=true

This may download a lot of Maven plugins if they already haven't been downloaded, but it will eventually prompt you for

the release version of each of the Maven projects, the tag name for the release, and the next development versions

(again for each of the Maven projects). The default values are probably acceptable; if not, then check that the

"<version>" tags in each of the POM files is correct and end with "-SNAPSHOT".

After the dry run completes you should clean up the files that the release plugin created in the dry run:

$ mvn -Passembly release:clean

Run the prepare step (without the dryRun option):

$ mvn -Passembly release:prepare

You will again be prompted for the release versions and tag name. These should be the same as what was used during the dry run. This will run the same steps as the dry run, with the additional step of tagging the release in SVN.

If there are any problems during this step, you should go back and try the dry run option. But after this runs successfully,

the release will be tagged in SVN, and the pom.xml files in SVN under /trunk will have the

next version in the "<version>" values.

However, the artifacts for the release are not yet published. That's the next step.

At this point, the release's artifacts need to be published to the JBoss Maven repository. This next command check outs the

files from the release tag created earlier (into a trunk/target/checkout directory), runs a build, and then

deploys the generated artifacts. Note that this ensures that the artifacts are built from the tagged code.

$ mvn release:perform -DuseReleaseProfile=false

Note

If during this process you get an error finding the released artifacts in your local Maven repository, you may

need to go into the trunk/target/checkout folder and run $ mvn install. This is a simple

workaround to make the artifacts available locally. Another option to try is adding -Dgoals=install,assembly

to the $ mvn release:perform... command above.

The artifacts are deployed to the local file system, which is comprised of a local checkout of the JBoss Maven2 repository

in a location specified by a combination of the <distributionManagement> section of several pom.xml

files and your personal settings.xml file. Once this Maven command completes, you will need to

commit the new files after they are deployed. For more information, see the

JBoss wiki.

At this point, the software has been released and tagged, and it's been deployed to a local checked-out copy of the JBoss DNA Maven 2 repository (via the "<distribution>" section of the pom.xml files). Those need to be committed into the Maven 2 repository using SVN. And finally, the last thing is to publish the release onto the project's downloads and documentation pages.

The assemblies of the source, binaries, etc. also need to be published onto the http://www.jboss.org/dna/downloads.html area of the the project page. This process is expected to change, as JBoss.org improves its infrastructure.

In this chapter, we described the various aspects of developing code for the JBoss DNA project. Before we start talking about some of the details of JBoss DNA repositories, connectors, and sequencers, we'll first talk about some very ubiquitous information: how does JBoss DNA load all of the extension classes? This is the topic of the next chapter.

The JBoss DNA project uses automated testing to verify that the software is doing what it's supposed to and not doing what it shouldn't do. These automated tests are run continuously and also act as regression tests, ensuring that we known if any problems we find and fix reappear later. All of our tests are executed as part of our Maven build process, and the entire build process (including the tests) is automatically run using Hudson continuous integration system.

Unit tests verify the behavior of a single class (or small set of classes) in isolation from other classes. We use the JUnit 4.4 testing framework, which has significant improvements over earlier versions and makes it very easy to quickly write unit tests with little extra code. We also frequently use the Mockito library to help create mock implementations of other classes that are not under test but are used in the tests.

Unit tests should generally run quickly and should not require large assemblies of components. Additionally, they may rely upon the file resources included in the project, but these tests should require no external resources (like databases or servers). Note that our unit tests are run during the "test" phase of the standard Maven lifecycle. This means that they are executed against the raw .class files created during complication.

Developers are expected to run all of the JBoss DNA unit tests in their local environment before committing changes to SVN.

So, if you're a developer and you've made changes to your local copy of the source, you can run those tests that are

related to your changes using your IDE or with Maven (or any other mechanism). But before you commit your changes,

you are expected to run a full Maven build using mvn clean install (in the "trunk/" directory).

Please do not rely upon continuous integration to run all of the tests for you - the CI

system is there to catch the occasional mistakes and to also run the integration tests.

While unit tests test individual classes in (relative) isolation, the purpose of integration tests are to verify that assemblies of classes and components are behaving correctly. These assemblies are often the same ones that end users will actually use. In fact, integration tests are executed during the "integration-test" phase of the standard Maven lifecycle, meaning they are executed against the packaged JARs and artifacts of the project.

Integration tests also use the JUnit 4.4 framework, so they are again easy to write and follow the same pattern as unit tests. However, because they're working with larger assemblies of components, they often will take longer to set up, longer to run, and longer to tear down. They also may require initializing "external resources", like databases or servers.

Note, that while external resources may be required, care should be taken to minimize these dependencies and to

ensure that most (if not all) integration tests may be run by anyone who downloads the source code. This means

that these external resources should be available and set up within the tests. For example, use in-memory databases

where possible. Or, if a database is required, use an open-source database (e.g., MySQL or PostgreSQL). And when

these external resources are not available, it should be obvious from the test class names and/or test method names

that it involved an external resource (e.g., "MySqlConnectorIntegrationTest.shouldFindNodeStoredInDatabase()").

As mentioned in the introduction, the JBoss DNA project doesn't follow any one methodology or process. Instead, we simply have a goal that as much code as possible is tested to ensure it behaves as expected. Do we expect 100% of the code is covered by automated tests? No, but we do want to test as much as we can. Maybe a simple JavaBean class doesn't need many tests, but any class with non-trivial logic should be tested.

We do encourage writing tests either before or while you write the code. Again, we're not blindly following a methodology. Instead, there's a very practical reason: writing the tests early on helps you write classes that are testable. If you wait until after the class (or classes) are done, you'll probably find that it's not easy to test all of the logic (especially the complicated logic).

Another suggestion is to write tests so that they specify and verify the behavior that is expected from a class or component.

One challenge developers often have is knowing what they should even test and what the tests should look like.

This is where Behavior-driven development (BDD)

helps out. If you think about what a class' behaviors are supposed to be (e.g., requirements), simply capture those

requirements as test methods (with no implementations). For example, a test class for sequencer

implementation might have a test method shouldNotThrowAnErrorWhenTheSuppliedStreamIsNull() { }. Then, after you enumerate

all the requirements you can think of, go back and start implementing the test methods.

If you look at the existing test cases, you'll find that the names of the unit and integration tests in JBoss DNA follow a naming style, where the test method names are readable sentences. Actually, we try to name the test methods and the test classes such that they form a concisely-worded requirement. For example,

InMemorySequencerTest.shouldNotThrowAnErrorWhenTheSuppliedStreamIsNull()

is easily translated into a readable requirement:

InMemorySequencer should not throw an error when the supplied stream is null.

In fact, at some point in the future, we'd like to process the source to automatically generate a list of the behavior specifications that are asserted by the tests.

But for now, we write tests - a lot of them. And by following a few simple conventions and practices, we're able to do it quickly and in a way that makes it easy to understand what the code is supposed to do (or not do).

Many Java specifications provide TCK test suites that can be used to check or verify that an implementation correctly implements the API or SPI defined by the specification. These TCK tests vary by technology, but JSR-170 does provide TCK tests that ensure that a JCR repository implementation exhibits the correct and expected behavior.

JBoss DNA has not yet passed enough of the TCK tests to publish the results. We still have to implement queries, which is a required feature of Level 1 repositories. However, suffice to say that JBoss DNA has passed many of the individual tests that make up the Level 1 and Level 2 tests, and it is a major objective of the next release to pass the remaining Level 1 and Level 2 tests (along with some other optional features).

JBoss DNA also frequently runs the JCR unit tests from the Apache Jackrabbit project. (Those these tests are not

the official TCK, they apparently are used within the official TCK.) These unit tests are set up in the

dna-jcr-tck project.

The JBoss DNA project organizes the codebase into a number of subprojects. The most fundamental are those core libraries, including the graph API, connector framework, sequencing framework, as well as the configuration and engine in which all the components run. These are all topics covered in this part of the document.

The JBoss DNA implementation of the JCR API as well as some other JCR-related components are covered in the next part.

The various components of JBoss DNA are designed as plain old Java objects, or POJOs. And rather than making assumptions about their environment, each component instead requires that any external dependencies necessary for it to operate must be supplied to it. This pattern is known as Dependency Injection, and it allows the components to be simpler and allows for a great deal of flexibility and customization in how the components are configured.

The approach that JBoss DNA takes is simple: a simple POJO that represents the everything about the environment

in which components operate. Called ExecutionContext, it contains references to most of the essential

facilities, including: security (authentication and authorization); namespace registry; name factories; factories

for properties and property values; logging; and access to class loaders (given a classpath).

Most of the JBoss DNA components require an ExecutionContext and thus have access to all these facilities.

The ExecutionContext is a concrete class that is instantiated with the no-argument constructor:

public classExecutionContextimplements ClassLoaderFactory { /** * Create an instance of an execution context, with default implementations for all components. */ publicExecutionContext() { ... } /** * Get the factories that should be used to create values for {@link Property properties}. * @return the property value factory; never null */ public ValueFactories getValueFactories() {...} /** * Get the namespace registry for this context. * @return the namespace registry; never null */ public NamespaceRegistry getNamespaceRegistry() {...} /** * Get the factory for creating {@link Property} objects. * @return the property factory; never null */ public PropertyFactory getPropertyFactory() {...} /** * Get the security context for this environment. * @return the security context; nevernull*/ public SecurityContext getSecurityContext() {...} /** * Return a logger associated with this context. This logger records only those activities within the * context and provide a way to capture the context-specific activities. All log messages are also * sent to the system logger, so classes that log via this mechanism should <i>not</i> also * {@link Logger#getLogger(Class) obtain a system logger}. * @param clazz the class that is doing the logging * @return the logger, named afterclazz; never null */ public Logger getLogger( Class<?> clazz ) {...} /** * Return a logger associated with this context. This logger records only those activities within the * context and provide a way to capture the context-specific activities. All log messages are also * sent to the system logger, so classes that log via this mechanism should <i>not</i> also * {@link Logger#getLogger(Class) obtain a system logger}. * @param name the name for the logger * @return the logger, named afterclazz; never null */ public Logger getLogger( String name ) {...} ... }

The fact that so many of the JBoss DNA components take ExecutionContext instances gives us some interesting possibilities.

For example, one execution context instance can be used as the highest-level (or "application-level") context for all of the services

(e.g., RepositoryService, SequencingService, etc.).

Then, an execution context could be created for each user that will be performing operations, and that user's context can

be passed around to not only provide security information about the user but also to allow the activities being performed

to be recorded for user feedback, monitoring and/or auditing purposes.

As mentioned above, the starting point is to create a default execution context, which will have all the default components:

ExecutionContextcontext = newExecutionContext();

Once you have this top-level context, you can start creating subcontexts with different components,

and different security contexts. (Of course, you can create a subcontext from any instance.)

To create a subcontext, simply use one of the with(...) methods on the parent context. We'll show examples

later on in this chapter.

JBoss DNA uses a simple abstraction layer to isolate it from the security infrastructure used within an application. A SecurityContext represents the context of an authenticated user, and is defined as an interface:

public interface SecurityContext { /** * Get the name of the authenticated user. * @return the authenticated user's name */ String getUserName(); /** * Determine whether the authenticated user has the given role. * @param roleName the name of the role to check * @return true if the user has the role and is logged in; false otherwise */ boolean hasRole( String roleName ); /** * Logs the user out of the authentication mechanism. * For some authentication mechanisms, this will be implemented as a no-op. */ void logout(); }

Every ExecutionContext has a SecurityContext instance, though the top-level (default) execution context does not represent

an authenticated user. But you can create a subcontext for a user authenticated via JAAS:

ExecutionContextcontext = ... String username = ... char[] password = ... String jaasRealm = ... SecurityContext securityContext = new JaasSecurityContext(jaasRealm, username, password);ExecutionContextuserContext = context.with(securityContext);

In the case of JAAS, you might not have the password but would rather prompt the user. In that case, simply create a subcontext with a different security context:

ExecutionContextcontext = ... String jaasRealm = ... CallbackHandler callbackHandler = ...ExecutionContextuserContext = context.with(new JaasSecurityContext(jaasRealm, callbackHandler);

Of course if your application has a non-JAAS authentication and authorization system, you can simply provide your own implementation of SecurityContext:

ExecutionContextcontext = ... SecurityContext mySecurityContext = ...ExecutionContextmyAppContext = context.with(mySecurityContext);

These ExecutionContext then represent the authenticated user in any component that uses the context.

One of the SecurityContext implementations provided by JBoss DNA is the JaasSecurityContext, which delegates any authentication

or authorization requests to a Java Authentication and Authorization Service (JAAS)

provider. This is the standard approach for authenticating and authorizing in Java.

There are quite a few JAAS providers available, but one of the best and most powerful providers is JBoss Security, the open source security framework used by JBoss. JBoss Security offers a number of JAAS login modules, including:

User-Roles Login Module is a simple

javax.security.auth.login.LoginContextimplementation that uses usernames and passwords stored in a properties file.Client Login Module prompts the user for their username and password.

Database Server Login Module uses a JDBC database to authenticate principals and associate them with roles.

LDAP Login Module uses an LDAP directory to authenticate principals. Two implementations are available.

Certificate Login Module authenticates using X509 certificates, obtaining roles from either property files or a JDBC database.

Operating System Login Module authenticates using the operating system's mechanism.

and many others. Plus, JBoss Security also provides other capabilities, such as using XACML policies or using federated single sign-on. For more detail, see the JBoss Security project.

If JBoss DNA is being used within a web application, then it is probably desirable to reuse the security infrastructure

of the application server. This can be accomplished by implementing the SecurityContext interface with an implementation

that delegates to the HttpServletRequest. Then, for each request, create a SecurityContextCredentials

instance around your SecurityContext, and use that credentials to obtain a JCR Session.

Here is an example of the SecurityContext implementation that uses the servlet request:

@Immutable public class ServletSecurityContext implements SecurityContext { private final String userName; private final HttpServletRequest request; /** * Create a {@link ServletSecurityContext} with the supplied * {@link HttpServletRequest servlet information}. * * @param request the servlet request; may not be null */ public ServletSecurityContext( HttpServletRequest request ) { this.request = request; this.userName = request.getUserPrincipal() != null ? request.getUserPrincipal().getName() : null; } /** * Get the name of the authenticated user. * @return the authenticated user's name */ public String getUserName() { return userName; } /** * Determine whether the authenticated user has the given role. * @param roleName the name of the role to check * @return true if the user has the role and is logged in; false otherwise */ boolean hasRole( String roleName ) { request.isUserInRole(roleName); } /** * Logs the user out of the authentication mechanism. * For some authentication mechanisms, this will be implemented as a no-op. */ public void logout() { } }

Then use this to create a Session:

HttpServletRequest request = ...

Repository repository = engine.getRepository("my repository");

SecurityContext securityContext = new ServletSecurityContext(httpServletRequest);

ExecutionContext servletContext = context.with(securityContext);

We'll see later in the JCR chapter how this can be use to obtain a JCR Session for the authenticated user.

As we saw earlier, every ExecutionContext has a registry of namespaces. Namespaces are used throughout the graph API

(as we'll see soon), and the prefix associated with each namespace makes for more readable string representations.

The namespace registry tracks all of these namespaces and prefixes, and allows registrations to be added, modified, or

removed. The interface for the NamespaceRegistry shows how these operations are done:

public interface NamespaceRegistry { /** * Return the namespace URI that is currently mapped to the empty prefix. * @return the namespace URI that represents the default namespace, * or null if there is no default namespace */ String getDefaultNamespaceUri(); /** * Get the namespace URI for the supplied prefix. * @param prefix the namespace prefix * @return the namespace URI for the supplied prefix, or null if there is no * namespace currently registered to use that prefix * @throws IllegalArgumentException if the prefix is null */ String getNamespaceForPrefix( String prefix ); /** * Return the prefix used for the supplied namespace URI. * @param namespaceUri the namespace URI * @param generateIfMissing true if the namespace URI has not already been registered and the * method should auto-register the namespace with a generated prefix, or false if the * method should never auto-register the namespace * @return the prefix currently being used for the namespace, or "null" if the namespace has * not been registered and "generateIfMissing" is "false" * @throws IllegalArgumentException if the namespace URI is null * @see #isRegisteredNamespaceUri(String) */ String getPrefixForNamespaceUri( String namespaceUri, boolean generateIfMissing ); /** * Return whether there is a registered prefix for the supplied namespace URI. * @param namespaceUri the namespace URI * @return true if the supplied namespace has been registered with a prefix, or false otherwise * @throws IllegalArgumentException if the namespace URI is null */ boolean isRegisteredNamespaceUri( String namespaceUri ); /** * Register a new namespace using the supplied prefix, returning the namespace URI previously * registered under that prefix. * @param prefix the prefix for the namespace, or null if a namesapce prefix should be generated * automatically * @param namespaceUri the namespace URI * @return the namespace URI that was previously registered with the supplied prefix, or null if the * prefix was not previously bound to a namespace URI * @throws IllegalArgumentException if the namespace URI is null */ String register( String prefix, String namespaceUri ); /** * Unregister the namespace with the supplied URI. * @param namespaceUri the namespace URI * @return true if the namespace was removed, or false if the namespace was not registered * @throws IllegalArgumentException if the namespace URI is null * @throws NamespaceException if there is a problem unregistering the namespace */ boolean unregister( String namespaceUri ); /** * Obtain the set of namespaces that are registered. * @return the set of namespace URIs; never null */ Set<String> getRegisteredNamespaceUris(); /** * Obtain a snapshot of all of the {@link Namespace namespaces} registered at the time this method * is called. The resulting set is immutable, and will not reflect changes made to the registry. * @return an immutable set of Namespace objects reflecting a snapshot of the registry; never null */ Set<Namespace> getNamespaces(); }

This interfaces exposes Namespace objects that are immutable:

@Immutable interface Namespace extends Comparable<Namespace> { /** * Get the prefix for the namespace * @return the prefix; never null but possibly the empty string */ String getPrefix(); /** * Get the URI for the namespace * @return the namespace URI; never null but possibly the empty string */ String getNamespaceUri(); }

JBoss DNA actually uses several implementations of NamespaceRegistry, but you can even implement your own

and create ExecutionContexts that uses it:

NamespaceRegistry myRegistry = ...

ExecutionContext contextWithMyRegistry = context.with(myRegistry);

JBoss DNA is designed around extensions: sequencers, connectors, MIME type detectors, and class loader factories. The core part of JBoss DNA is relatively small and has few dependencies, while many of the "interesting" components are extensions that plug into and are used by different parts of the core or by layers above (such as the JCR implementation). The core doesn't really care what the extensions do or what external libraries they require, as long as the extension fulfills its end of the extension contract.

This means that you only need the core modules of JBoss DNA on the application classpath, while the extensions do not have to be on the application classpath. And because the core modules of JBoss DNA have few dependencies, the risk of JBoss DNA libraries conflicting with the application's are lower. Extensions, on the other hand, will likely have a lot of unique dependencies. By separating the core of JBoss DNA from the class loaders used to load the extensions, your application is isolated from the extensions and their dependencies.

Note

Of course, you can put all the JARs on the application classpath, too. This is what the examples in the Getting Started document do.

But in this case, how does JBoss DNA load all the extension classes? You may have noticed earlier that

ExecutionContext implements the ClassLoaderFactory interface with a single method:

public interface ClassLoaderFactory { /** * Get a class loader given the supplied classpath. The meaning of the classpath * is implementation-dependent. * @param classpath the classpath to use * @return the class loader; may not be null */ ClassLoader getClassLoader( String... classpath ); }

This means that any component that has a reference to an ExecutionContext has the ability to create a

class loader with a supplied class path. As we'll see later, the connectors and sequencers are all

defined with a class and optional class path. This is where that class path comes in.

The actual meaning of the class path, however, is a function of the implementation. JBoss DNA uses

a StandardClassLoaderFactory that just loads the classes using the Thread's current context

class loader (or, if there is none, delegates to the class loader that loaded the StandardClassLoaderFactory class).

Of course, it's possible to implement other ClassLoaderFactory with other implementations.

Then, just create a subcontext with your implementation:

ClassLoaderFactory myClassLoaderFactory = ...

ExecutionContext contextWithMyClassLoaderFactories = context.with(myClassLoaderFactory);

Note

The dna-classloader-maven project has a class loader factory implementation that parses the names into

Maven coordinates, then uses those coordinates

to look up artifacts in a Maven 2 repository. The artifact's POM file is used to determine the dependencies,

which is done transitively to obtain the complete dependency graph. The resulting class loader has access

to these artifacts in dependency order.

This class loader is not ready for use, however, since there is no tooling to help populate the repository.

JBoss DNA often needs the ability to determine the MIME type for some binary content. When uploading content into a repository, we may want to add the MIME type as metadata. Or, we may want to make some processing decisions based upon the MIME type. So, JBoss DNA created a small pluggable framework for determining the MIME type by using the name of the file (e.g., extensions) and/or by reading the actual content.

JBoss DNA defines a MimeTypeDetector interface that abstracts the implementation that actually determines the MIME type given the name and content. If the detector is able to determine the MIME type, it simply returns it as a string. If not, it merely returns null. Note, however, that a detector must be thread-safe. Here is the interface:

@ThreadSafe public interface MimeTypeDetector { /** * Returns the MIME-type of a data source, using its supplied content and/or its supplied name, * depending upon the implementation. If the MIME-type cannot be determined, either a "default" * MIME-type ornullmay be returned, where the former will prevent earlier * registered MIME-type detectors from being consulted. * * @param name The name of the data source; may benull. * @param content The content of the data source; may benull. * @return The MIME-type of the data source, or optionallynull* if the MIME-type could not be determined. * @throwsIOExceptionIf an error occurs reading the supplied content. */ String mimeTypeOf( String name, InputStream content ) throwsIOException; }

To use a detector, simply invoke the method and supply the name of the content (e.g., the name of the file, with the extension) and the InputStream to the actual binary content. The result is a String containing the MIME type (e.g., "text/plain") or null if the MIME type cannot be determined. Note that the name or InputStream may be null, making this a very versatile utility.

Once again, you can obtain a MimeTypeDetector from the ExecutionContext. JBoss DNA provides and uses by

default an implementation that uses only the name (the content is ignored), looking at the name's extension

and looking for a match in a small listing (loaded from the org/jboss/dna/graph/mime.types loaded from the classpath).

You can add extensions by copying this file, adding or correcting the entries, and then placing your updated file in the

expected location on the classpath.

Of course, you can always use a different MimeTypeDetector by creating a subcontext and supplying your implementation:

MimeTypeDetector myDetector = ...

ExecutionContext contextWithMyDetector = context.with(myDetector);

Two other components are made available by the ExecutionContext. The PropertyFactory is an interface

that can be used to create Property instances, which are used throughout the graph API. The ValueFactories

interface provides access to a number of different factories for different kinds of property values.

These will be discussed in much more detail in the next chapter. But like the other components that

are in an ExecutionContext, you can create subcontexts with different implementations:

PropertyFactory myPropertyFactory = ...

ExecutionContext contextWithMyPropertyFactory = context.with(myPropertyFactory);

and

ValueFactories myValueFactories = ...

ExecutionContext contextWithMyValueFactories = context.with(myValueFactories);

Of course, implementing your own factories is a pretty advanced topic, and it will likely be something you do not need to do in your application.

In this chapter, we introduced the ExecutionContext as a representation of the environment in which many of the

JBoss DNA components operate. ExecutionContext provides a very simple but powerful way to inject commonly-needed

facilities throughout the system.

In the next chapter, we'll dive into Graph API and will introduce the notion of nodes, paths, names, and properties, that are so essential and used throughout JBoss DNA.

One of the central concepts within JBoss DNA is that of its graph model. Information is structured into a hierarchy of nodes with properties, where nodes in the hierarchy are identified by their path (and/or identifier properties). Properties are identified by a name that incorporates a namespace and local name, and contain one or more property values consisting of normal Java strings, names, paths, URIs, booleans, longs, doubles, decimals, binary content, dates, UUIDs, references to other nodes, or any other serializable object.

This graph model is used throughout JBoss DNA: it forms the basis for the connector framework, it is used by the sequencing framework for the generated output, and it is what the JCR implementation uses internally to access and operate on the repository content.

Therefore, this chapter provides essential information that will be essential to really understanding how the connectors, sequencers, and other JBoss DNA features work.

JBoss DNA uses names to identify quite a few different types of objects. As we'll soon see, each property of a node is given by a name, and each segment in a path is comprised of a name. Therefore, names are a very important concept.

JBoss DNA names consist of a local part and are qualified with a namespaces. The local part can consist of

any character, and the namespace is identified by a URI. Namespaces were introduced in the

previous chapter and are managed by the ExecutionContext's

namespace registry. Namespaces help reduce the risk of

clashes in names that have an equivalent same local part.

All names are immutable, which means that once a Name object is created, it will never change. This characteristic makes it much easier to write thread-safe code - the objects never change and therefore require no locks or synchronization to guarantee atomic reads. This is a technique that is more and more often found in newer languages and frameworks that simplify concurrent operations.

Name is also a interface rather than a concrete class:

@Immutable public interface Name extends Comparable<Name>, Serializable, Readable { /** * Get the local name part of this qualified name. * @return the local name; never null */ String getLocalName(); /** * Get the URI for the namespace used in this qualified name. * @return the URI; never null but possibly empty */ String getNamespaceUri(); }

This means that you need to use a factory to create Name instances.

The use of a factory may seem like a disadvantage and unnecessary complexity, but there actually are several benefits. First, it hides the concrete implementations, which is very appealing if an optimized implementation can be chosen for particular situations. It also simplifies the usage, since Name only has a few methods. Third, it allows the factory to cache or pool instances where appropriate to help conserve memory. Finally, the very same factory actually serves as a conversion mechanism from other forms. We'll actually see more of this later in this chapter, when we talk about other kinds of property values.

The factory for creating Name objects is called NameFactory and is available within the ExecutionContext,

via the getValueFactories() method. But before we see that, let's first discuss how names are represented as strings.

We'll see how names are used later one, but one more point to make: Name is both serializable and comparable,

and all implementations should support equals(...) and hashCode() so that Name can

be used as a key in a hash-based map. Name also extends the Readable interface, which we'll learn

more about later in this chapter.

Another important concept in JBoss DNA's graph model is that of a path, which provides a way of locating a node within a hierarchy. JBoss DNA's Path object is an immutable ordered sequence of Path.Segment objects. A small portion of the interface is shown here:

@Immutable public interface Path extends Comparable<Path>, Iterable<Path.Segment>, Serializable, Readable { /** * Return the number of segments in this path. * @return the number of path segments */ public int size(); /** * Return whether this path represents the root path. * @return true if this path is the root path, or false otherwise */ public boolean isRoot(); /** * {@inheritDoc} */ public Iterator<Path.Segment> iterator(); /** * Obtain a copy of the segments in this path. None of the segments are encoded. * @return the array of segments as a copy */ public Path.Segment[] getSegmentsArray(); /** * Get an unmodifiable list of the path segments. * @return the unmodifiable list of path segments; never null */ public List<Path.Segment> getSegmentsList(); /** * Get the last segment in this path. * @return the last segment, or null if the path is empty */ public Path.Segment getLastSegment(); /** * Get the segment at the supplied index. * @param index the index * @return the segment * @throws IndexOutOfBoundsException if the index is out of bounds */ public Path.Segment getSegment( int index ); /** * Return an iterator that walks the paths from the root path down to this path. This method * always returns at least one path (the root returns an iterator containing itself). * @return the path iterator; never null */ public Iterator<Path> pathsFromRoot(); /** * Return a new path consisting of the segments starting atbeginIndexindex (inclusive). * This is equivalent to callingpath.subpath(beginIndex,path.size()-1). * @param beginIndex the beginning index, inclusive. * @return the specified subpath * @exception IndexOutOfBoundsException if thebeginIndexis negative or larger * than the length of thisPathobject */ public Path subpath( int beginIndex ); /** * Return a new path consisting of the segments between thebeginIndexindex (inclusive) * and theendIndexindex (exclusive). * @param beginIndex the beginning index, inclusive. * @param endIndex the ending index, exclusive. * @return the specified subpath * @exception IndexOutOfBoundsException if thebeginIndexis negative, or *endIndexis larger than the length of thisPath* object, orbeginIndexis larger thanendIndex. */ public Path subpath( int beginIndex, int endIndex ); ... }

There are actually quite a few methods (not shown above) for obtaining related paths: the path of the parent, the path of an ancestor, resolving a path relative to this path, normalizing a path (by removing "." and ".." segments), finding the lowest common ancestor shared with another path, etc. There are also a number of methods that compare the path with others, including determining whether a path is above, equal to, or below this path.

Each Path.Segment is an immutable pair of a Name and same-name-sibling (SNS) index. When two sibling nodes have the same name, then the first sibling will have SNS index of "1" and the second will be given a SNS index of "2". (This mirrors the same-name-sibling index behavior of JCR paths.)

@Immutable public static interface Path.Segment extends Cloneable, Comparable<Path.Segment>, Serializable, Readable { /** * Get the name component of this segment. * @return the segment's name */ public Name getName(); /** * Get the index for this segment, which will be 1 by default. * @return the index */ public int getIndex(); /** * Return whether this segment has an index that is not "1" * @return true if this segment has an index, or false otherwise. */ public boolean hasIndex(); /** * Return whether this segment is a self-reference (or "."). * @return true if the segment is a self-reference, or false otherwise. */ public boolean isSelfReference(); /** * Return whether this segment is a reference to a parent (or "..") * @return true if the segment is a parent-reference, or false otherwise. */ public boolean isParentReference(); }

Like Name, the only way to create a Path or a Path.Segment is to use the PathFactory, which is available

within the ExecutionContext via the getValueFactories() method.